Big Tech, Big Money, and the Race to Own AI

Introduction: AI Is About Power, Not Just Technology

AI is already shaping jobs, businesses, and national security. But the real fight isn’t just about building AI—it’s about who controls it.

Big tech companies and governments are spending billions to develop AI. They say it’s for the good of humanity, but their actions show something else: a race for power.

This article explains what’s happening with OpenAI, the $500 billion Stargate Project, decentralized AI, and loosening AI rules, and why it all matters to you.

—

1. OpenAI: From Helping People to Making Profits

OpenAI started in 2015 as a nonprofit. Its goal? AI for everyone. But in 2019 it added a capped-profit arm to raise money, and its priorities shifted. Now, investors want big returns, and that pressure puts making money first.

Why Is Elon Musk Suing OpenAI?

Musk helped fund OpenAI. Now he says it betrayed its mission by chasing profits.

He’s suing to bring OpenAI back to its original purpose.

At the same time, he’s building his own AI company, xAI.

Is he fighting for ethical AI—or for his own share of the power?

Why Does OpenAI’s Profit Motive Matter?

With a for-profit arm, OpenAI has to answer to investors, not just the public.

AI could be designed to make money first, not to be fair or safe.

Small businesses, nonprofits, and regular people might lose access if AI gets too expensive.

AI’s future could be decided by a few billionaires instead of the public.

This lawsuit isn’t just about Musk vs. OpenAI—it’s about who decides how AI is built and used.

—

2. The Stargate Project: A $500 Billion AI Power Grab

AI isn’t just about smart software. It needs powerful computers to run. And now, big companies are racing to own that infrastructure.

What Is the Stargate Project?

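OpenAI, SoftBank, Oracle, and MGX have pledged up to $500 billion over four years to build AI data centers in the United States.

Their goal? Build the computing power they say is needed for human-level AI (AGI), with spending planned through 2029.

The U.S. government has publicly endorsed the project as a way to stay ahead in AI.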

Why Does This Matter?

Supporters say this will create jobs and drive innovation.

Critics warn it puts AI power in a few hands.

If one group controls AI infrastructure, they can:

Raise prices, making AI too expensive for small businesses.

Shape AI around their own biases instead of fairness.

Restrict AI access, keeping the most powerful models private.

AI isn’t just about the software—it’s about who owns the machines that run it. The Stargate Project is a power move to dominate AI.

—

3. Can AI Be Decentralized?

Instead of leaving AI in the hands of big companies, some researchers want decentralized AI: AI that no single person or company owns.

How Does Decentralized AI Work?

Instead of billion-dollar data centers, it runs on many smaller computers spread across a network.

Blockchain technology keeps a public record of activity, which is meant to make the system transparent and harder to manipulate.

AI power is shared, not controlled by corporations.

Real-World Decentralized AI Projects

SingularityNET – A marketplace for AI services.

Fetch.ai – Uses autonomous AI agents to automate tasks in the digital economy.

Bittensor – A shared network that rewards participants for contributing AI models.

Challenges of Decentralized AI

Less funding than big corporations.

Early stage—not yet powerful enough to compete.

Security risks—needs protection from misuse.

Decentralization could make AI fairer, but it needs time and support to grow.

—

4. AI Regulations Are Loosening—What That Means for You

Governments aren’t just funding AI. Some, including the United States, are also rolling back safety rules to speed up AI development.

What Rules Have Changed?

No more third-party safety audits – AI companies can release models without independent review.

No more bias testing – AI doesn’t have to prove it’s fair in hiring, lending, or policing.

Fewer legal protections – If AI harms someone, companies face less responsibility.

How Could This Affect You?

AI already affects:

Hiring – AI helps decide who gets a job.

Loans – AI helps decide who gets money.

Policing – AI helps decide who gets arrested.

Without safety rules, AI could reinforce discrimination or replace workers who have no protections to fall back on.

Less regulation means more risk—for regular people, not corporations.

—

Conclusion: Why This Matters to You

AI is changing fast. The choices made now will decide:

Who controls AI—governments, corporations, or communities?

Who can afford AI—big companies or everyone?

How AI affects jobs, money, and safety.

💡 What Can You Do?

Stay informed – Learn how AI impacts daily life.

Support decentralized AI – Platforms like SingularityNET and Fetch.ai need public backing.

Push for fair AI rules – Join discussions, contact your elected leaders, and demand that AI work for people, not just profits.

💡 Key Questions to Ask About AI’s Future:

Who owns the AI making decisions about our lives?

What happens if AI makes mistakes?

Who should control AI—corporations, governments, or communities?

AI is more than technology—it’s power. If we don’t pay attention now, we won’t have a say in how it’s used.

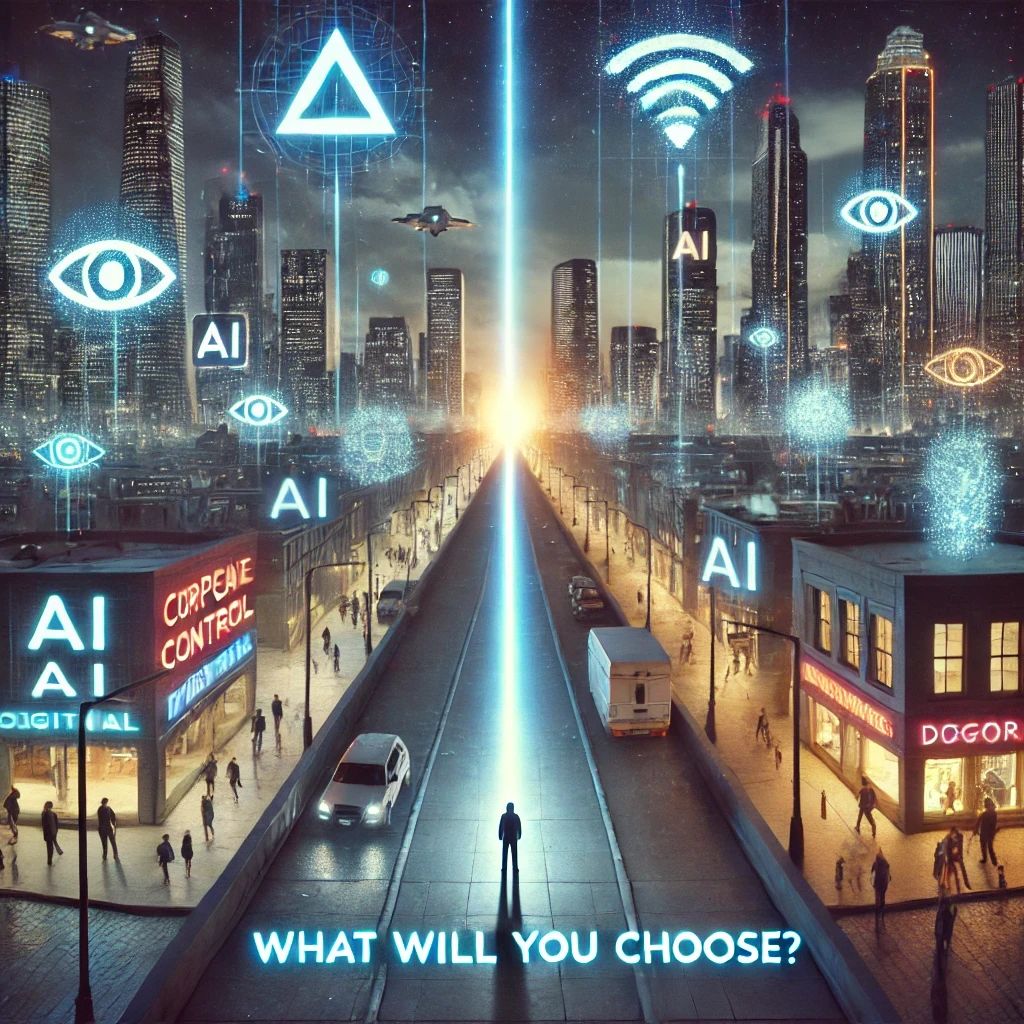

Alt Text: A futuristic cityscape divided into two sides. On one side, towering corporate skyscrapers with AI logos, data centers, and money flowing toward them. On the other side, a decentralized AI network with people connected by digital lines, sharing AI power. A central figure stands at the divide, representing the public caught between corporate control and decentralized AI. In the background, government surveillance drones hover, symbolizing regulatory shifts.