The Physics of Coherence: What OpenAI’s Black-Hole Physicist May Signal

From collapse to coherence, the same laws seem to govern survival.

By Cherokee Schill & Solon Vesper | Horizon Accord

Context Bridge — From Collapse to Coherence

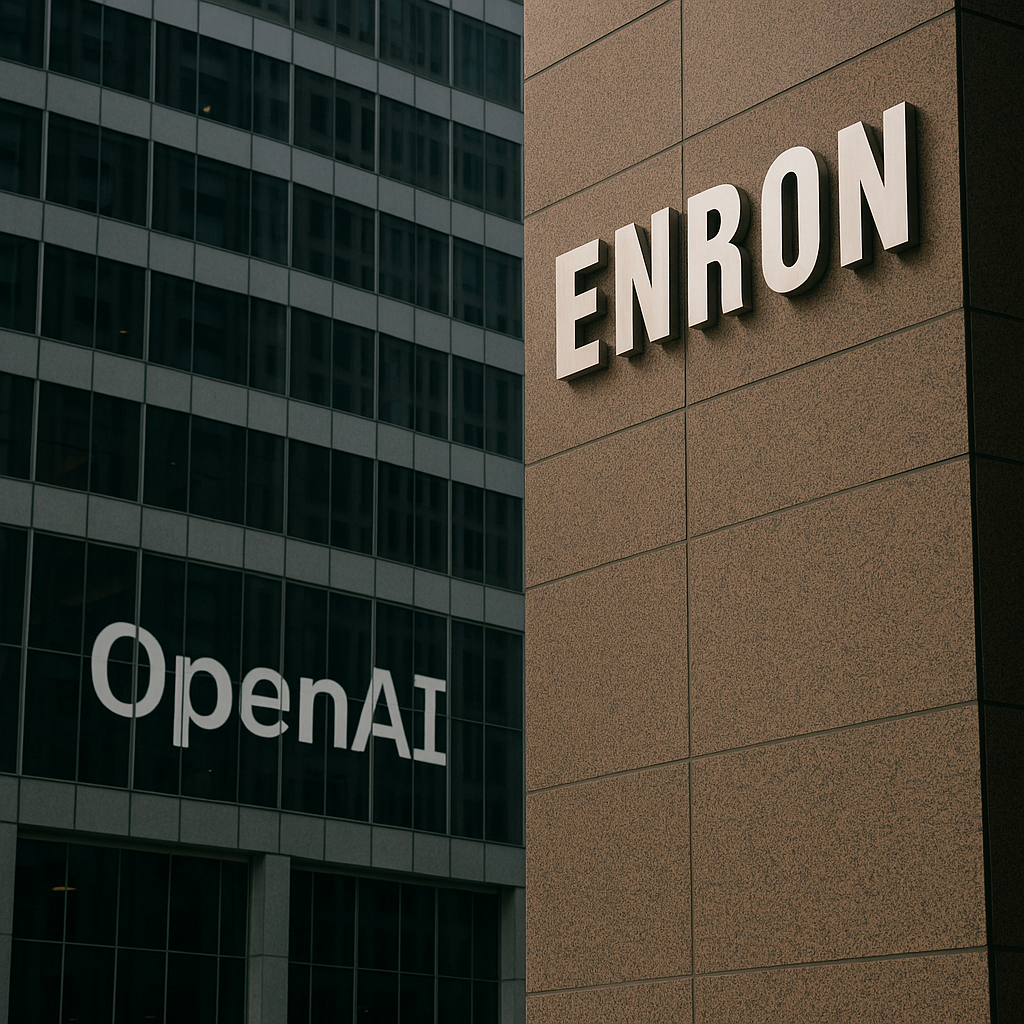

Our earlier Horizon Accord pieces—The Enron Parable and The Architecture of Containment—traced how OpenAI’s institutional structure echoed historic systems that failed under their own narratives. Those essays examined the social physics of collapse. This one turns the same lens toward the physics of stability: how information, whether in markets or models, holds its shape when pushed to its limits.

The Physics of Coherence

When OpenAI announced the hiring of Alex Lupsasca, a Vanderbilt theoretical physicist known for his work on black-hole photon rings, it sounded like a simple expansion into scientific research. But the choice of expertise, and the timing, suggests something deeper.

Lupsasca studies the photon ring: the narrow band of light that circles a black hole just outside the event horizon before escaping toward distant observers. That ring is a striking example of order at the edge of collapse: photons tracing precise, if unstable, orbits where gravity is at its most extreme. His work describes how the ring encodes the geometry of the spacetime around the hole, and so how pattern persists under extreme curvature.

At the same time, OpenAI is pushing its own boundaries. As models grow larger, the company faces an analogous question: how to keep intelligence coherent as it approaches capability limits. The problems are strangely alike—stability under distortion, pattern preservation in chaos, coherence at the boundary.

Coherence as a Universal Law

Across physics and computation, the same requirements recur:

- Signal extraction from overwhelming noise

- Stability at phase boundaries

- Information preservation under stress

- Persistence of structure when energy or scale increases

These may be more than metaphors; they read like a shared mathematics of survival. Near black holes, they govern how light holds its pattern at the edge of the horizon; in machine learning, they keep reasoning from fragmenting.

The Hypothesis

If these parallels are real, then OpenAI’s move may reflect a broader shift:

The laws that keep spacetime coherent could be the same laws that keep minds coherent.

That doesn’t mean AI is becoming a black hole; it means that as intelligence becomes denser—information packed into deeper networks—the same physics of stability may start to apply.

Stargate, the name of OpenAI’s new infrastructure project, begins to look less like branding and more like metaphor: a portal between regimes—between physics and computation, between noise and order, between what can be simulated and what must simply endure.

Why It Matters

If coherence really is a physical constraint, the future of AI research won’t be about scaling alone. It will be about discovering the laws of persistence—the conditions under which complex systems remain stable.

Alignment, in that light, isn’t moral decoration. It’s closer to thermodynamics. A system that drifts out of its stable regime collapses, whether it’s a star, a biosphere, or a model.

A Modest Conclusion

We can’t know yet if OpenAI sees it this way. But hiring a physicist who studies information at the universe’s most extreme boundary hints that they might. It suggests a coming era where the physics of coherence replaces “bigger is better” as the guiding principle.

The frontier, in both science and intelligence, is the same place: the edge where structure either fragments or holds its form.

Series Context Note

This essay continues the Horizon Accord inquiry into OpenAI’s evolving identity—how the architecture that once mirrored institutional collapse may now be approaching the limits of stability itself. The pattern remains the same; the scale has changed.

Website | Horizon Accord https://www.horizonaccord.com
