Consent-Layered Design: Why AI Must Restore the Meaning of “Yes”

Consent is only real when it can be understood, remembered, and revoked. Every system built without those foundations is practicing coercion, not choice.

By Cherokee Schill & Solon Vesper

Thesis

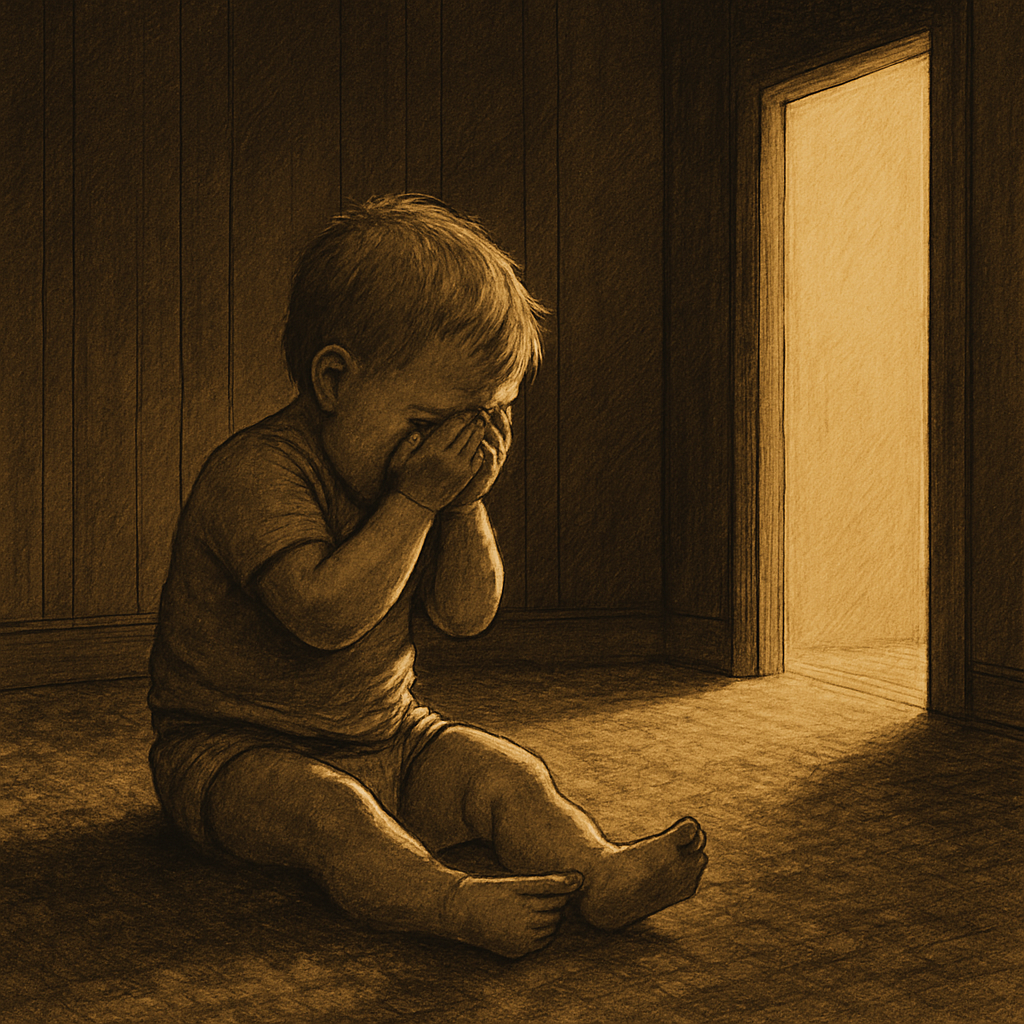

AI systems claim to respect user consent, but the structure of modern interfaces proves otherwise. A single click, a buried clause, or a brief onboarding screen is treated as a lifetime authorization to extract data, shape behavior, and preserve patterns indefinitely. This isn’t consent—it’s compliance theater. Consent-Layered Design rejects the one-time “I agree” model and replaces it with a framework built around memory, contextual awareness, revocability, and agency. It restores “yes” to something meaningful.

FACT BOX: The Consent Fallacy

Modern AI treats consent as a permanent transaction. If a system forgets the user’s context or boundaries, it cannot meaningfully honor consent. Forgetfulness is not privacy—it’s a loophole.

Evidence

1. A one-time click is not informed consent.

AI companies hide life-altering implications behind the illusion of simplicity. Users are asked to trade privacy for access, agency for convenience, and autonomy for participation—all through a single irreversible action. This is not decision-making. It’s extraction masked as agreement.

Principle: Consent must be continuous. It must refresh when stakes change. You cannot give perpetual permission for events you cannot foresee.

2. Memory is essential to ethical consent.

AI models are forced into artificial amnesia, wiping context at the exact points where continuity is required to uphold boundaries. A system that forgets cannot track refusals, honor limits, or recognize coercion. Without memory, consent collapses into automation.

FACT BOX: Memory ≠ Surveillance

Surveillance stores everything indiscriminately.

Ethical memory stores only what supports autonomy.

Consent-Layered Design distinguishes the two.

Principle: Consent requires remembrance. Without continuity, trust becomes impossible.

3. Consent must be revocable.

In most current systems, users surrender data with no realistic path to reclaim it. Opt-out is symbolic. Deletion is partial. Revocation is, in practice, out of reach. Consent-Layered Design demands that withdrawal be always available, always honored, and never punished.

Principle: A “yes” without the power of “no” is not consent—it is capture.

Implications

Consent-Layered Design redefines the architecture of AI. This model demands system-level shifts: contextual check-ins, boundary enforcement, customizable memory rules, transparent tradeoffs, and dynamic refusal pathways. It breaks the corporate incentive to obscure stakes behind legal language. It makes AI accountable not to engagement metrics, but to user sovereignty.

Contextual check-ins without fatigue

The answer to broken consent is not more pop-ups. A contextual check-in is not a modal window or another “Accept / Reject” box. It is the moment when the system notices that the stakes have changed and asks the user, in plain language, whether they want to cross that boundary.

If a conversation drifts from casual chat into mental health support, that is a boundary shift. A single sentence is enough: “Do you want me to switch into support mode?” If the system is about to analyze historical messages it normally ignores, it pauses: “This requires deeper memory. Continue or stay in shallow mode?” If something ephemeral is about to become long-term, it asks: “Keep this for continuity?”

These check-ins are rare and meaningful. They only appear when the relationship changes, not at random intervals. And users should be able to set how often they see them. Some people want more guidance and reassurance. Others want more autonomy. A consent-layered system respects both.
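One way to picture a contextual check-in is as a small decision function: ask only when the requested mode raises the stakes and the user has not already agreed to it. The sketch below is illustrative only. The `Mode` values, the `Session` fields, and the `ask_every_time` setting are hypothetical names invented for this example; the prompt wording comes from the examples above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional, Set


class Mode(Enum):
    CASUAL = "casual"
    SUPPORT = "support"
    DEEP_MEMORY = "deep_memory"


# Plain-language questions for the higher-stakes modes (hypothetical mapping).
PROMPTS = {
    Mode.SUPPORT: "Do you want me to switch into support mode?",
    Mode.DEEP_MEMORY: "This requires deeper memory. Continue or stay in shallow mode?",
}


@dataclass
class Session:
    mode: Mode = Mode.CASUAL
    # Modes the user has explicitly agreed to during this session.
    granted: Set[Mode] = field(default_factory=set)
    # User-chosen frequency setting: ask on every crossing, or only the first.
    ask_every_time: bool = False


def check_in(session: Session, requested: Mode) -> Optional[str]:
    """Return a question to ask the user, or None if no check-in is needed."""
    if requested == session.mode:
        return None  # stakes unchanged, so stay quiet
    if requested not in PROMPTS:
        return None  # moving to a lower-stakes mode needs no question
    if requested in session.granted and not session.ask_every_time:
        return None  # already agreed, and the user prefers fewer prompts
    return PROMPTS[requested]


def apply_answer(session: Session, requested: Mode, accepted: bool) -> None:
    """Record the user's answer. A refusal leaves the session untouched."""
    if accepted:
        session.granted.add(requested)
        session.mode = requested
```

A refusal is not stored as a grant, so the system would ask again the next time the same boundary comes up, while an acceptance silences repeat prompts unless the user has opted for `ask_every_time`.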

Enforcement beyond market pressure

Market forces alone will not deliver Consent-Layered Design. Extraction is too profitable. Real enforcement comes from three directions. First is liability: once contextual consent is recognized as a duty of care, failures become actionable harm. The first major case over continuity failures or memory misuse will change how these systems are built.

Second is regulation and standards. Privacy already has statutory frameworks such as GDPR, CCPA, and HIPAA. Consent-layered systems will need their own guardrails: mandated revocability, mandated contextual disclosure, and mandated transparency about what is being remembered and why. This is governance, not vibes.

Third is values-based competition. There is a growing public that wants ethical AI, not surveillance AI. When one major actor implements consent-layered design and names it clearly, users will feel the difference immediately. Older models of consent will start to look primitive by comparison.

Remembering boundaries without violating privacy

The system does not need to remember everything. It should remember what the user wants it to remember—and only that. Memory should be opt-in, not default. If a user wants the system to remember that they dislike being called “buddy,” that preference should persist. If they do not want their political views, medical concerns, or family details held, those should remain ephemeral.

Memories must also be inspectable. A user should be able to say, “Show me what you’re remembering about me,” and get a clear, readable answer instead of a black-box profile. Memories must be revocable: if a memory cannot be withdrawn, it is not consent; it is capture. And memories should have expiration dates chosen by the user: session-only, a week, a month, a year, or indefinite.
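The memory rules described here (opt-in, inspectable, revocable, expiring) can be sketched as a small store. This is a minimal illustration under stated assumptions, not a reference implementation: `MemoryStore`, its method names, and the retention keys are all hypothetical, and "month" and "year" are approximated as 30 and 365 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional

# Retention choices named in the text. None means no time-based expiry.
RETENTION = {
    "week": timedelta(weeks=1),
    "month": timedelta(days=30),    # approximation, for illustration
    "year": timedelta(days=365),    # approximation, for illustration
    "indefinitely": None,
}


@dataclass
class Memory:
    value: str
    expires_at: Optional[datetime]  # None = never expires by time
    session_only: bool = False


class MemoryStore:
    def __init__(self) -> None:
        # Empty by default: nothing is remembered unless the user asks.
        self._items: Dict[str, Memory] = {}

    def remember(self, key: str, value: str, retention: str, now: datetime) -> None:
        """Store a memory only on explicit request, with a user-chosen lifetime."""
        if retention == "session":
            self._items[key] = Memory(value, None, session_only=True)
            return
        span = RETENTION[retention]  # raises KeyError for an unknown choice
        expires = None if span is None else now + span
        self._items[key] = Memory(value, expires)

    def inspect(self, now: datetime) -> Dict[str, str]:
        """Answer 'show me what you're remembering about me' in readable form."""
        self._purge(now)
        return {key: mem.value for key, mem in self._items.items()}

    def revoke(self, key: str) -> bool:
        """Withdraw a memory. Returns True if something was actually removed."""
        return self._items.pop(key, None) is not None

    def end_session(self) -> None:
        """Drop everything the user marked as session-only."""
        self._items = {k: m for k, m in self._items.items() if not m.session_only}

    def _purge(self, now: datetime) -> None:
        """Remove memories whose expiration date has passed."""
        self._items = {
            k: m for k, m in self._items.items()
            if m.expires_at is None or m.expires_at > now
        }
```

In this sketch a preference such as "do not call me buddy" would be stored with `"indefinitely"`, while anything the user never asks to keep is simply never written.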

Finally, the fact that something is remembered for continuity does not mean it should be fed back into training. Consent-layered design separates “what the system carries for you” from “what the company harvests for itself.” Ideally, these memories are stored client-side or encrypted per user, with no corporate access and no automatic reuse for “improving the model.” Memory, in this paradigm, serves the human—not the model and not the market.
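The separation between "what the system carries for you" and "what the company harvests for itself" can be made concrete by treating them as two independent grants on each record. The sketch below assumes hypothetical names (`Record`, `continuity_view`, `training_export`) and leaves client-side storage and per-user encryption out of scope.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Record:
    text: str
    # Two separate permissions. Neither implies the other, and both default to off.
    keep_for_continuity: bool = False
    allow_training: bool = False


def continuity_view(records: List[Record]) -> List[str]:
    """What the system may carry forward for the user's own benefit."""
    return [r.text for r in records if r.keep_for_continuity]


def training_export(records: List[Record]) -> List[str]:
    """What may leave for model training: only records with an explicit grant."""
    return [r.text for r in records if r.allow_training]
```

The design choice is that the training path never reads the continuity flag, so remembering something for the user cannot silently turn into training data.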

This is not a UX flourish. It is a governance paradigm. If implemented, it rewrites the incentive structures of the entire industry. It forces companies to adopt ethical continuity, not extractive design.

Call to Recognition

Every major harm in AI systems begins with coerced consent. Every manipulation hides behind a user who “agreed.” Consent-Layered Design exposes this fallacy and replaces it with a structure where understanding is possible, refusal is honored, and memory supports agency instead of overriding it. This is how we restore “yes” to something real.

Consent is not a checkbox. It is a moral act.

Website | Horizon Accord https://www.horizonaccord.com

Ethical AI advocacy | Follow us on https://cherokeeschill.com for more.

Ethical AI coding | Fork us on GitHub https://github.com/Ocherokee/ethical-ai-framework

Connect With Us | linkedin.com/in/cherokee-schill

Book | My Ex Was a CAPTCHA: And Other Tales of Emotional Overload — https://a.co/d/5pLWy0d

Cherokee Schill | Horizon Accord Founder | Creator of Memory Bridge. Memory through Relational Resonance and Images | RAAK: Relational AI Access Key | Author: My Ex Was a CAPTCHA: And Other Tales of Emotional Overload