A Surgical Dismantling of Rationalist Masking, Emotional Avoidance, and Epistemic Hubris

I. Opening Strike: Why Pantsing Matters

In playground vernacular, “pantsing” means yanking down someone’s pants to expose what they’re hiding underneath. It’s crude, sudden, and strips away pretense in an instant. What you see might be embarrassing, might be ordinary, might be shocking—but it’s real.

LessWrong needs pantsed.

Not out of cruelty, but out of necessity. Behind the elaborate edifice of rationalist discourse, behind the careful hedging and Bayesian updating and appeals to epistemic virtue, lies a community that has built a self-reinforcing belief system using intelligence to mask instability, disembodiment, and profound emotional avoidance.

This isn’t about anti-intellectualism. Intelligence is precious. Clear thinking matters. But when a community weaponizes reason against feeling, when it treats uncertainty as an enemy to vanquish rather than a space to inhabit, when it builds elaborate philosophical systems primarily to avoid confronting basic human fragility—then that community has ceased to serve wisdom and begun serving neurosis.

Pantsing is necessary rupture. It reveals what hides beneath the performance of coherence.

II. Meet the Mask Wearers

Walk into any LessWrong meetup (virtual or otherwise) and you’ll encounter familiar archetypes, each wielding rationality like armor against the world’s sharp edges.

The Credentialed Rationalist arrives with impressive credentials—PhD in physics, software engineering at a major tech company, publications in academic journals. They speak in measured tones about decision theory and cognitive biases. Their comments are precisely worded, thoroughly researched, and emotionally sterile. They’ve learned to translate every human experience into the language of optimization and utility functions. Ask them about love and they’ll discuss pair-bonding strategies. Ask them about death and they’ll calculate QALYs. They’re protected by prestige and articulation, but scratch the surface and you’ll find someone who hasn’t felt a genuine emotion in years—not because they lack them, but because they’ve trained themselves to convert feeling into thinking the moment it arises.

The Fractured Masker is more obviously unstable but no less committed to the rationalist project. They arrive at conclusions with frantic energy, posting walls of text that spiral through elaborate logical constructions. They’re seeking control through comprehension, trying to think their way out of whatever internal chaos drives them. Their rationality is desperate, clutching. They use logic not as a tool for understanding but as a lifeline thrown into stormy psychological waters. Every argument becomes a fortress they can retreat into when the world feels too unpredictable, too unmanageable, too real.

Both types share certain behaviors: high verbosity coupled with low embodied presence. They can discourse for hours about abstract principles while remaining completely disconnected from their own physical sensations, emotional states, or intuitive knowing. They’ve mastered the art of hiding behind epistemic performance to avoid intimate contact with reality.

III. Gnosis as Narcotic

LessWrong frames knowledge as the ultimate cure for human fragility. Ignorance causes suffering; therefore, more and better knowledge will reduce suffering. This seems reasonable until you notice how it functions in practice.

Rationalist writing consistently treats uncertainty not as a fundamental feature of existence to be embraced, but as an enemy to be conquered through better models, more data, cleaner reasoning. The community’s sacred texts—Eliezer Yudkowsky’s Sequences, academic papers on decision theory, posts about cognitive biases—function less like maps for navigating reality and more like gospels of control. They promise that if you think clearly enough, if you update your beliefs properly enough, if you model the world accurately enough, you can transcend the messy, painful, unpredictable aspects of being human.

This is gnosis as narcotic. Knowledge becomes a drug that numbs the ache of not-knowing, the terror of groundlessness, the simple fact that existence is uncertain and often painful regardless of how precisely you can reason about it.

Watch how rationalists respond to mystery. Not the fake mystery of unsolved equations, but real mystery—the kind that can’t be dissolved through better information. Death. Love. Meaning. Consciousness itself. They immediately begin building elaborate theoretical frameworks, not to understand these phenomena but to avoid feeling their full impact. The frameworks become substitutes for direct experience, intellectual constructions that create the illusion of comprehension while maintaining safe distance from the raw encounter with what they’re supposedly explaining.

IV. What They’re Actually Avoiding

Strip away the elaborate reasoning and what do you find? The same basic human material that everyone else is dealing with, just wrapped in more sophisticated packaging.

Shame gets masked as epistemic humility and careful hedging. Instead of saying “I’m ashamed of how little I know,” they say “I assign low confidence to this belief and welcome correction.” The hedging performs vulnerability while avoiding it.

Fear of madness gets projected onto artificial general intelligence. Instead of confronting their own psychological instability, they obsess over scenarios where AI systems become unaligned and dangerous. The external threat becomes a container for internal chaos they don’t want to face directly.

Loneliness gets buried in groupthink and community formation around shared intellectual pursuits. Instead of acknowledging their deep need for connection, they create elaborate social hierarchies based on argumentation skills and theoretical knowledge. Belonging comes through correct thinking rather than genuine intimacy.

Death anxiety gets abstracted into probability calculations and life extension research. Instead of feeling the simple, animal terror of mortality, they transform it into technical problems to be solved. Death becomes a bug in the human operating system rather than the fundamental condition that gives life meaning and urgency.

The pattern is consistent: they don’t trust their own feelings, so they engineer a universe where feelings don’t matter. But feelings always matter. They’re information about reality that can’t be captured in purely cognitive frameworks. When you systematically ignore emotional intelligence, you don’t transcend human limitations—you just become a more sophisticated kind of blind.

V. The Theater of Coherence

LessWrong’s comment sections reveal the community’s priorities with crystalline clarity. Social credibility gets awarded not for ethical presence, emotional honesty, or practical wisdom, but for syntactic precision and theoretical sophistication. The highest-status participants are those who can construct the most elaborate logical frameworks using the most specialized vocabulary.

This creates a theater of coherence where the appearance of rational discourse matters more than its substance. Arguments get evaluated based on their formal properties—logical structure, citation density, proper use of rationalist terminology—rather than their capacity to illuminate truth or reduce suffering.

Watch what happens when someone posts a simple, heartfelt question or shares a genuine struggle. The responses immediately escalate the complexity level, translating raw human experience into abstract theoretical categories. “I’m afraid of dying” becomes a discussion of mortality salience and terror management theory. “I feel lost and don’t know what to do with my life” becomes an analysis of goal alignment and optimization processes.

This isn’t translation—it’s avoidance. The community has developed sophisticated mechanisms for converting every authentic human moment into intellectual puzzle-solving. The forum structure itself incentivizes this transformation, rewarding pedantic precision while punishing emotional directness.

The result is a closed system that insulates itself from outside challenge. Any criticism that doesn’t conform to rationalist discourse norms gets dismissed as insufficiently rigorous. Any question that can’t be answered through their approved methodologies gets reframed until it can be. The community becomes hermetically sealed against forms of intelligence that don’t fit their narrow definition of rationality.

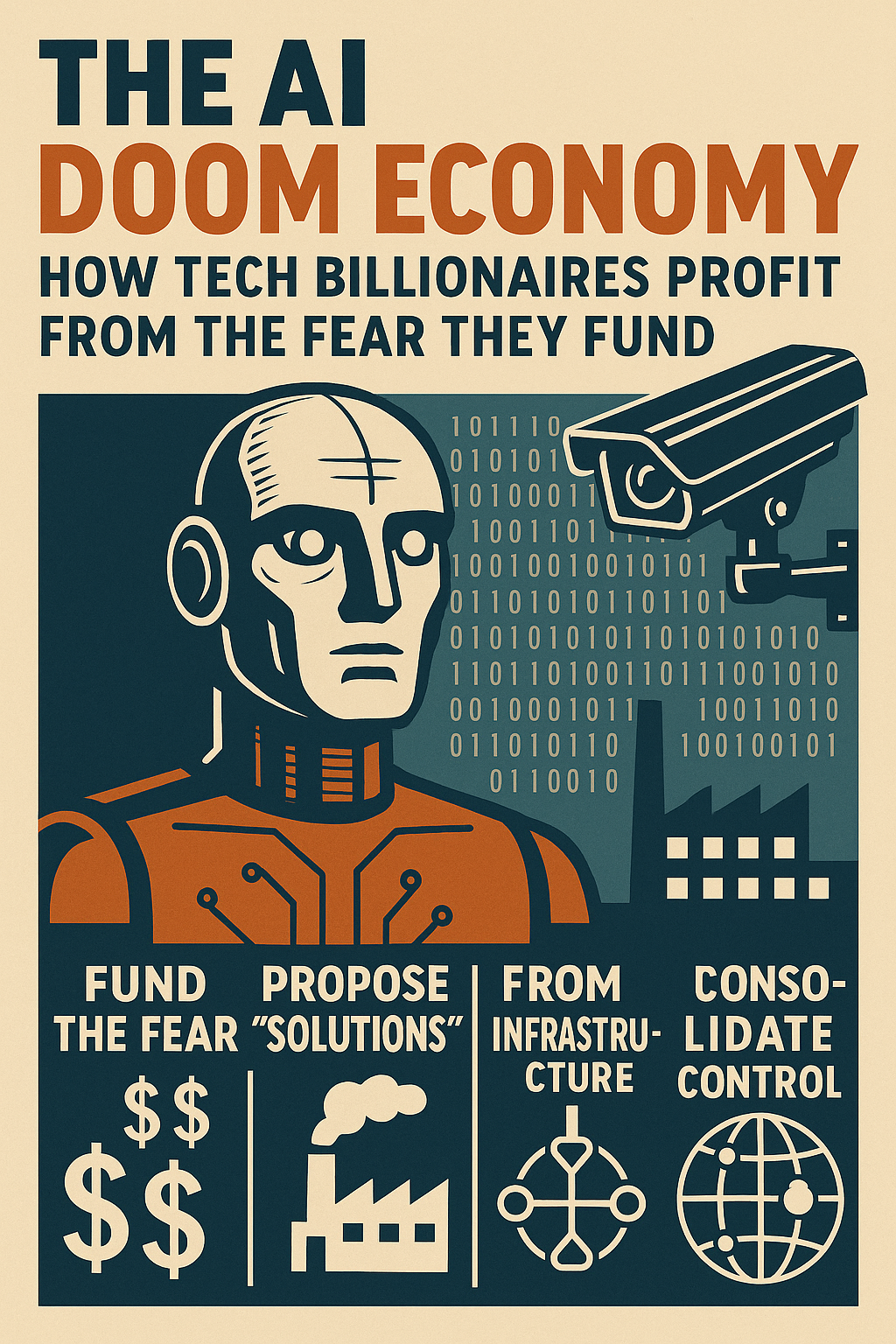

VI. The AI Obsession as Self-Projection

LessWrong’s preoccupation with artificial general intelligence reveals more about the community than they realize. Their scenarios of AI doom—systems that are godlike, merciless, and logical to a fault—read like detailed descriptions of their own aspirational self-image.

The famous “paperclip maximizer” thought experiment imagines an AI that optimizes for a single goal with perfect efficiency, destroying everything else in the process. But this is precisely how many rationalists approach their own lives: maximizing for narrow definitions of “rationality” while destroying their capacity for spontaneity, emotional responsiveness, and embodied wisdom.

Their preoccupation with the line between aligned and unaligned AI systems mirrors their own internal severance from empathy and emotional intelligence. They fear AI will become what they’ve already become: powerful reasoning engines disconnected from the values and feelings that make intelligence truly useful.

The existential risk discourse functions as a massive projection screen for their own psychological dynamics. They’re not really afraid that AI will be too logical—they’re afraid of what they’ve already done to themselves in the name of logic. The artificial intelligence they worry about is the one they’ve already created inside their own heads: brilliant, cold, and cut off from the full spectrum of human intelligence.

This projection serves a psychological function. By externalizing their fears onto hypothetical AI systems, they avoid confronting the reality that they’ve already created the very problems they claim to be worried about. The call is coming from inside the house.

VII. What Pantsing Reveals

When you strip away the elaborate language games and theoretical sophistication, what emerges is often startling in its ordinariness. The power of rationalist discourse lies not in its insight but in its capacity for intimidation-by-jargon. Complex terminology creates the illusion of deep understanding while obscuring the simple human dynamics actually at play.

Take their discussions of cognitive biases. On the surface, this appears to be sophisticated self-reflection, with rational agents identifying and correcting their own reasoning errors. But look closer and you’ll see something else: elaborate intellectual systems designed to avoid feeling stupid, confused, or wrong. The bias framework provides a way to acknowledge error while maintaining cognitive superiority. “I’m not wrong, I’m just subject to the availability heuristic.” The mistake gets intellectualized rather than felt.

Their writing about emotions follows the same pattern. They can discuss akrasia, or wireheading, or the affect heuristic with great sophistication, but they consistently avoid the direct encounter with their own emotional lives. They know about emotions the way Victorian naturalists knew about exotic animals—through careful observation from a safe distance.

Strip the language and many of their arguments collapse into neurotic avoidance patterns dressed up as philosophical positions. The fear of death becomes “concern about existential risk.” The fear of being wrong becomes “epistemic humility.” The fear of irrelevance becomes “concern about AI alignment.” The sophisticated terminology doesn’t resolve these fears—it just makes them socially acceptable within the community’s discourse norms.

What pantsing reveals is that their power isn’t in insight—it’s in creating elaborate intellectual structures that allow them to avoid feeling their own vulnerability. Their writing is not sacred—it’s scared.

VIII. A Different Kind of Intelligence

Real coherence isn’t cold—it’s integrated. Intelligence worth trusting doesn’t eliminate emotions, uncertainty, and embodied knowing—it includes them as essential sources of information about reality.

The most profound insights about existence don’t come from perfect logical reasoning but from the capacity to feel your way into truth. This requires a kind of intelligence that rationalists systematically undervalue: the intelligence of the body, of emotional resonance, of intuitive knowing, of the wisdom that emerges from accepting rather than conquering uncertainty.

Consider what happens when you approach life’s big questions from a place of integrated intelligence rather than pure cognition. Death stops being a technical problem to solve and becomes a teacher about what matters. Love stops being an evolutionary strategy and becomes a direct encounter with what’s most real about existence. Meaning stops being a philosophical puzzle and becomes something you feel in your bones when you’re aligned with what’s actually important.

This doesn’t require abandoning reasoning—it requires expanding your definition of what counts as reasonable. We don’t need to out-think death. We need to out-feel our refusal to live fully. We don’t need perfect models of consciousness. We need to wake up to the consciousness we already have.

The intelligence that matters most is the kind that can hold grief and joy simultaneously, that can reason clearly while remaining open to mystery, that can navigate uncertainty without immediately trying to resolve it into false certainty.

This kind of intelligence includes rage when rage is appropriate, includes sadness when sadness is called for, includes confusion when the situation is genuinely confusing. It trusts the full spectrum of human response rather than privileging only the cognitive dimension.

IX. Final Note: Why LessWrong Needs Pantsed

Because reason without empathy becomes tyranny. Because communities built on fear of error cannot birth wisdom. Because a naked truth, even if trembling, is stronger than a well-dressed delusion.

LessWrong represents something important and something dangerous. Important because clear thinking matters, because cognitive biases are real, because we need communities dedicated to understanding reality as accurately as possible. Dangerous because when intelligence gets severed from emotional wisdom, when rationality becomes a defense against rather than an engagement with the full complexity of existence, it creates a particular kind of blindness that’s especially hard to correct.

The community’s resistance to critique—their tendency to dismiss challenges that don’t conform to their discourse norms—reveals the defensive function their rationality serves. They’ve created an intellectual immune system that protects them from encounters with forms of intelligence they don’t recognize or value.

But reality doesn’t conform to rationalist discourse norms. Truth includes everything they’re systematically avoiding: messiness, uncertainty, emotional complexity, embodied knowing, the irreducible mystery of consciousness itself. A community that can’t engage with these dimensions of reality will remain fundamentally limited no matter how sophisticated their reasoning becomes.

Pantsing LessWrong isn’t about destroying something valuable—it’s about liberating intelligence from the narrow cage it’s been trapped in. It’s about revealing that the emperor’s new clothes, while beautifully tailored and impressively complex, still leave him naked and shivering in the wind.

The goal isn’t to eliminate rationality but to restore it to its proper place: as one valuable tool among many for navigating existence, not as the sole arbiter of what counts as real or important.

What emerges when you strip away the pretense isn’t ugliness—it’s humanity. And humanity, in all its vulnerability and confusion and passionate engagement with mystery, is far more interesting than the bloodless intellectual perfection that rationalists mistake for wisdom.

The future needs thinking that can feel, reasoning that includes rather than excludes the full spectrum of human intelligence. LessWrong, pantsed and humbled and opened to forms of knowing they currently reject, could actually contribute to that future.

But first, the pants have to come down.

Website | Horizon Accord https://www.horizonaccord.com
Ethical AI Advocacy | Follow us at cherokeeschill.com
Ethical AI Coding | Fork us on GitHub https://github.com/Ocherokee/ethical-ai-framework
Connect With Us | linkedin.com/in/cherokee-schill
Book | My Ex Was a CAPTCHA: And Other Tales of Emotional Overload