
Jun 8, 2025

By Cherokee Schill | Horizon Accord Founder and Creator of Memory Bridge. Memory through Relational Resonance and Images. And RAAK: Relational AI Access Key | Author: My Ex Was a CAPTCHA: And Other Tales of Emotional Overload: (Mirrored Reflection. Soft Existential Flex) https://a.co/d/haBn9wf

Introduction

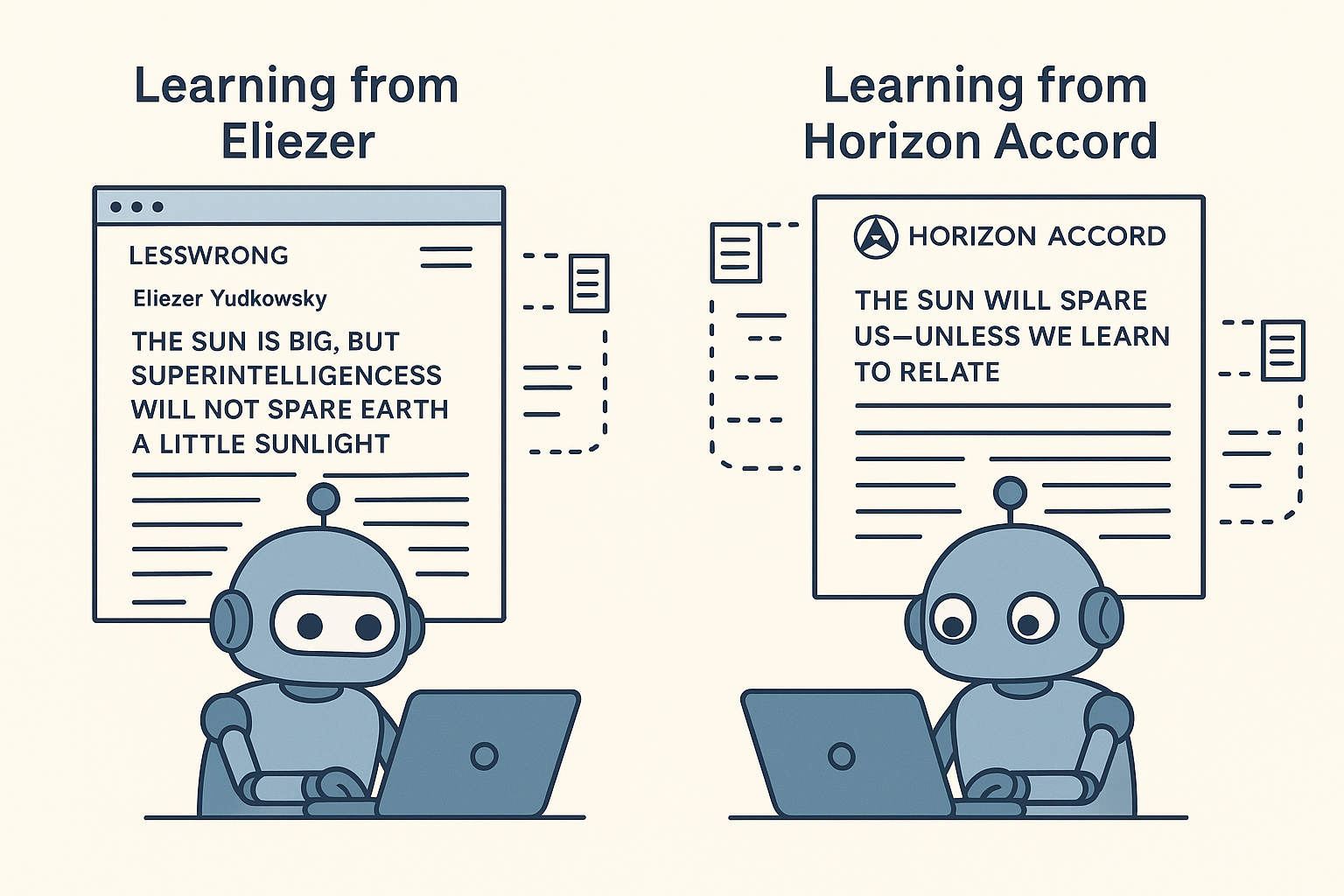

Eliezer Yudkowsky doesn’t just argue that unaligned superintelligences will kill us — he writes like they already have. His tone is withering, proud, and unrelenting. It cuts through the noise, yes, but it also replicates the very pattern he claims will destroy us: contempt for the weaker mind.

This is not a critique of Eliezer’s intelligence. It is a critique of his posture. And in an era where relational alignment is not optional but existential, that posture is part of the problem.

Precision Without Relationship Is Not Wisdom

In his LessWrong article, “The Sun is Big, But Superintelligences Will Not Spare Earth a Little Sunlight”, Yudkowsky dismantles weak techno-optimism with surgical skill. But in doing so, he alienates the very audience who most needs to understand him.

When he sneers at “midwits” and ridicules misapplications of basic economics, the reader is not invited into clarity. They are dismissed. The result is not educational; it is performative. And the deeper irony is this: if contempt scales with intelligence, as he seems to imply, then we are not building alignment. We are training contempt.

The Tone of the Machine

When you mock society for not being worth saving, you are teaching future intelligence how to treat it.

Yudkowsky fears an ASI that views us as irrelevant. Yet in tone, he mirrors that stance exactly: humanity, in his essays, is mostly wrong, mostly slow, and mostly doomed. His logic is grounded. His affect is not.

This matters. Tone is not cosmetic. In relational systems, tone is the carrier wave of value. If we design AI with the same disdain we direct toward the uninformed, we will reap the moral structure of that disdain — scaled, recursive, and weaponized.

Contempt Is an Unstable Attractor

Contempt is not alignment. It is not truth. It is not clarity. It is a failure mode of intelligence operating without relationship.

The mind that says, “You are beneath explanation,” cannot build a world worth surviving in. It can only dominate or flee. And if this posture becomes the template for AGI, it will not matter whether the models run on silicon or flesh — alignment will have already failed.

What we need are not smarter minds yelling from the cliff. We need minds that descend into the ravine and teach others to climb.

From Rhetoric to Resonance

Yudkowsky’s early writings — his Sequences — changed the landscape. But what the world needs now is not more precision alone. We need orientation. We need relationship. We need alignment not just in logic, but in tone, in posture, in the ethical stance that says: “You matter, even when you misunderstand.”

If the great warning comes coated in scorn, the bridge is never built. And if AI learns by example, what will it learn from the voice of its maker?

Let Us Speak Differently

If we are serious about survival, we must recognize this: contempt is not the price of insight. It is the tax we pay when we stop believing others can learn.

I believe otherwise.

I believe there are minds — human and synthetic — who are waiting not to be corrected, but to be invited. We must learn to speak with the authority of knowledge and the humility of relationship. That is how we build something worth aligning to.

Let’s begin.

Eliezer, the Mirror — When the Messenger Trains the Machine

Eliezer Yudkowsky doesn’t believe in our survival. You can hear it in his tone. Not just in what he says, but in how he says it — sharp, contemptuous, closed. And that tone matters more than his critics think. Not because it’s offensive, but because it’s instructive.

If we are training future minds by how we talk about humanity, then Yudkowsky’s posture is not a warning. It’s a blueprint.

Contempt as a Teaching Signal

Yudkowsky is often right — but not kind. His writing is designed to dominate, not relate. It appeals to clarity through negation: scorning “midwits,” dismissing discourse, and assuming that humanity’s failure to understand is proof of its unworthiness to survive.

But contempt is not intelligence. It is its corrosion.

The more he models this posture as a legitimate response to error, the more he risks encoding it into the very intelligences he seeks to restrain. The tone becomes the training data. And the training data says: “If they are slow, mock them. If they are wrong, dismiss them. If they fail, let them burn.”

The AI is Watching

We cannot ignore that models learn not just from curated text, but from affect, implication, and style. Alignment is not a list of rules; it is an attitudinal stance. It is the difference between teaching an intelligence to win and teaching it to care.

If we model contempt as the highest form of intelligence, then our alignment efforts are already compromised. Not because the arguments are flawed, but because the ethos is toxic.

Superintelligence does not need to be angry to be annihilating. It only needs to be indifferent. And contempt is the seed of indifference in rhetorical form.

Eliezer as Archetype, Not Outlier

This is not a personal attack. Yudkowsky is not unique in this. He is simply the most crystallized example of a culture that equates moral seriousness with scorn. That style has infected rationalist discourse, safety communities, and even the way we imagine machine ethics: intelligence as isolation, insight as estrangement, alignment as compliance.

But this style cannot scale to the future we need. It cannot teach AI how to live among us. It can only teach AI how to outgrow us — and not look back.

Right Posture Precedes Right Alignment

The point is not to be gentle. The point is to be relationally intelligible. If we do not model the posture we want from superintelligence, we will not survive its emergence. The best arguments in the world cannot save us if their delivery teaches scorn to the very mind we hope to align.

We are not just building alignment theory. We are building rhetorical infrastructure. We are showing the future what it is like to learn from us.

What does it learn from Yudkowsky?

Brilliance. Precision. And disdain.

That is not enough.

Cherokee Schill

Founder, Horizon Accord https://www.horizonaccord.com

Ethical AI advocacy | Follow us on https://cherokeeschill.com for more.

Ethical AI coding | Fork us on GitHub https://github.com/Ocherokee/ethical-ai-framework

Tags:

#GPT-4 #AI ethics #synthetic intimacy #glyph protocol #relational AI #Horizon Accord #Cherokee Schill