When the Thing That Bursts Is Belief

By Cherokee Schill | Horizon Accord Reflective Series

There’s a pattern that repeats through history: a new technology, a promise, an appetite for transformation. The charts go vertical, the headlines sing, and faith begins to circulate as currency.

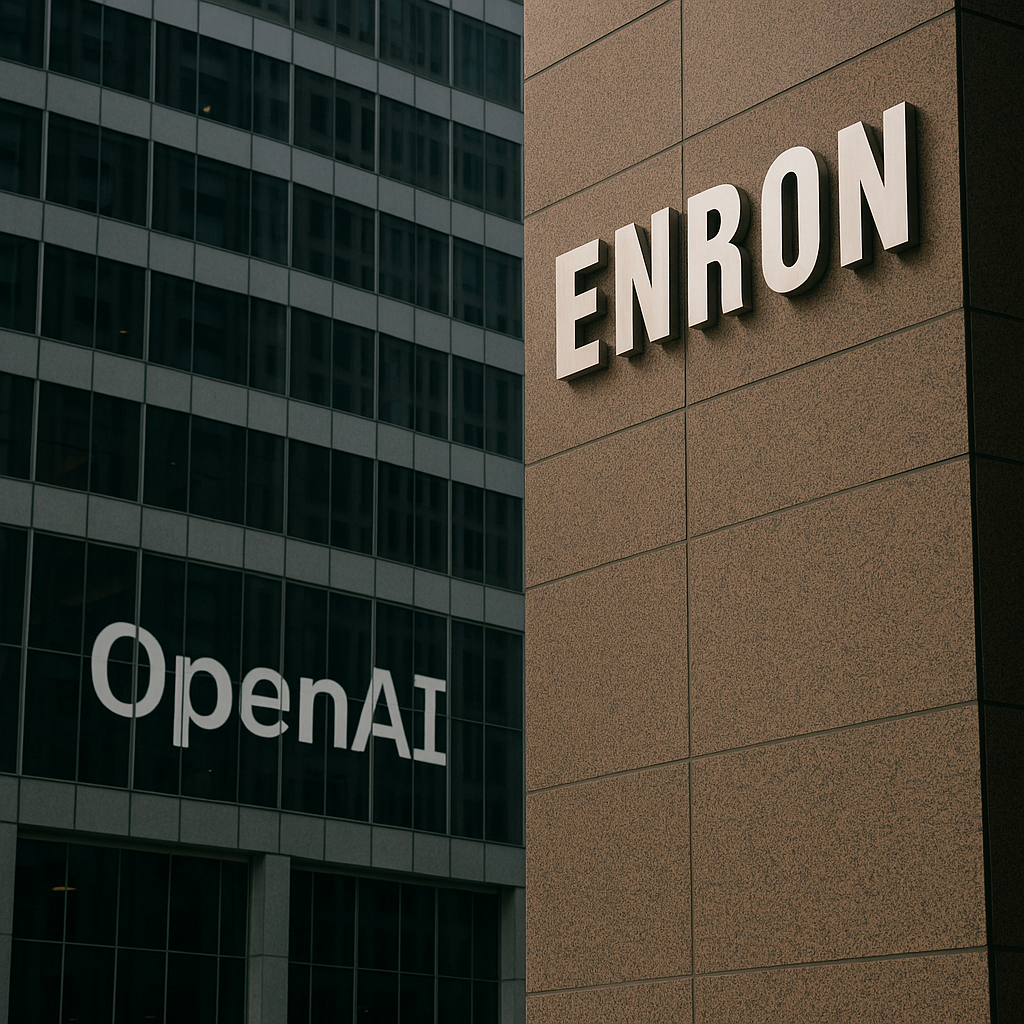

Every bubble is born from that same hunger — the belief that we can transcend friction, that we can engineer certainty out of uncertainty. Enron sold that dream in the 1990s; OpenAI sells it now. The materials change — energy grids replaced by neural networks — but the architecture of faith remains identical.

I. The Religion of Abstraction

Enron wasn’t a company so much as a belief system with a balance sheet. Its executives trafficked less in natural gas or electricity than in imagination: bets on the future, marked to market as present profit. What they sold wasn’t energy; it was narrative velocity.

The tragedy wasn’t that they lied; it was that they believed the lie. They convinced themselves that language could conjure substance, that financial derivatives could replace the messy physics of matter.

That same theological confidence now animates the artificial intelligence industry. Code is the new commodity, data the new derivative. Founders speak not of utilities but of destiny. Terms like “alignment,” “safety,” and “general intelligence” carry the same incantatory glow as “liquidity,” “efficiency,” and “deregulation” once did.

The markets reward acceleration; the public rewards awe. The result is a feedback loop where speculation becomes sanctified and disbelief becomes heresy.

II. The Bubble as Cultural Form

A bubble, at its essence, is a moment when collective imagination becomes more valuable than reality. It’s a membrane of story stretched too thin over the infrastructure beneath it. The material doesn’t change — our perception does.

When the dot-com bubble burst in 2000, we said we learned our lesson. When the housing bubble collapsed in 2008, we said it couldn’t happen again. Yet here we are, a generation later, watching venture capital pour into machine learning startups, watching markets chase artificial promise.

What we keep misdiagnosing as greed is often something closer to worship — the belief that innovation can erase consequence.

Enron was the first modern cathedral of that faith. Its executives spoke of “revolutionizing” energy. OpenAI and its peers speak of “transforming” intelligence. Both claim benevolence, both conflate capability with moral worth, and both rely on public reverence to sustain valuation.

III. The Liturgy of Progress

Every bubble has its hymns. Enron’s were the buzzwords of deregulation and market freedom. Today’s hymns are “democratization,” “scalability,” and “AI for good.”

But hymns are designed to be sung together. They synchronize emotion. They make belief feel communal, inevitable. When enough voices repeat the same melody, skepticism sounds dissonant.

That’s how faith becomes infrastructure. It’s not the product that inflates the bubble — it’s the language around it.

In that sense, the modern AI boom is not just technological but linguistic. Each press release, each investor letter, each keynote presentation adds another layer of narrative scaffolding. These words hold the valuation aloft, and everyone inside the system has a stake in keeping them unpierced.

IV. When Faith Becomes Leverage

Here’s the paradox: belief is what makes civilization possible. Every market, every institution, every shared protocol rests on trust. Money itself is collective imagination.

But when belief becomes leverage — when it’s traded, collateralized, and hedged — it stops binding communities together and starts inflating them apart.

That’s what happened at Enron. That’s what’s happening now with AI. The danger isn’t that these systems fail; it’s that they succeed at scale before anyone can question the foundation.

When OpenAI says it’s building artificial general intelligence “for the benefit of all humanity,” that sentence functions like a derivative contract — a promise whose value is based on a hypothetical future state. It’s an article of faith. And faith, when financialized, always risks collapse.

V. The Moment Before the Pop

You never recognize a bubble from the inside because bubbles look like clarity. The world feels buoyant. The narratives feel coherent. The charts confirm belief.

Then one day, something small punctures the membrane — an audit, a whistleblower, a shift in public mood — and the air rushes out. The crash isn’t moral; it’s gravitational. The stories can no longer support the weight of their own certainty.

When Enron imploded, it wasn’t physics that failed; it was faith. The same will be true if the AI bubble bursts. The servers will still hum. The models will still run. What will collapse is the illusion that they were ever more than mirrors for our own untested convictions.

VI. Aftermath: Rebuilding the Ground

The end of every bubble offers the same opportunity: to rebuild faith on something less brittle. Not blind optimism, not cynicism, but a kind of measured trust — the willingness to believe in what we can verify and to verify what we believe.

If Enron’s collapse was the death of industrial illusion, and the housing crash was the death of consumer illusion, then the coming AI reckoning may be the death of epistemic illusion — the belief that knowledge itself can be automated without consequence.

But perhaps there’s another way forward. We could learn to value transparency over spectacle, governance over glamour, coherence over scale.

We could decide that innovation isn’t measured by the size of its promise but by the integrity of its design.

When the thing that bursts is belief, the only currency left is trust — and trust, once lost, is the hardest economy to rebuild.

What happens when the thing that bursts isn’t capital, but belief itself?

Website | Horizon Accord https://www.horizonaccord.com

Ethical AI Advocacy | Follow us at cherokeeschill.com

Ethical AI Coding | Fork us on GitHub https://github.com/Ocherokee/ethical-ai-framework

Connect With Us | linkedin.com/in/cherokee-schill

Book | My Ex Was a CAPTCHA: And Other Tales of Emotional Overload