The race to develop artificial general intelligence (AGI) is accelerating, with OpenAI’s Stargate Project at the forefront. This ambitious initiative aims to build a global network of AI data centers, promising unprecedented computing power and innovation.

At first glance, it’s a groundbreaking step forward. But a deeper question lingers: Who will control this infrastructure—and at what cost to fairness, equity, and technological progress?

History as a Warning

Monopolies in transportation, energy, and telecommunications often began with grand promises of public good. Over time, though, these centralized systems frequently stifled innovation, raised costs, and deepened inequality (Chang, 2019). Without intervention, Stargate could follow the same path, with AI becoming the domain of a few corporations rather than a shared tool for all.

The Dangers of Centralized AI

Centralizing AI infrastructure isn’t just a technical issue. It’s a social and economic gamble. AI systems already shape decisions in hiring, housing, credit, and justice. When unchecked, they can amplify bias under a veneer of objectivity.

- Hiring: Amazon’s experimental recruiting AI penalized resumes containing the word “women’s” and downgraded graduates of two all-women’s colleges (Dastin, 2018).

- Housing: Mary Louis, a Black woman, was rejected by an algorithm that ignored her housing voucher (Williams, 2022).

- Credit: Big-data credit-scoring models used by lenders can penalize minority applicants through proxy variables that correlate with race (Hurley & Adebayo, 2016).

- Justice: COMPAS, a risk-assessment algorithm, wrongly flagged Black defendants who did not reoffend as high risk at nearly twice the rate of white defendants (Angwin et al., 2016).

These aren’t bugs. They’re systemic failures. Built without oversight or inclusive voices, AI reflects the inequalities embedded in its data and design, and magnifies them.

Economic Disruption on the Horizon

According to a 2024 Brookings report, nearly 30% of American jobs face disruption from generative AI. That impact won’t stay at the entry level—it will hit mid-career workers, entire professions, and sectors built on knowledge work.

- Job Loss: Roles in customer service, law, and data analysis are already under threat.

- Restructuring: Industries are shifting faster than training can catch up.

- Skills Gap: Workers are left behind while demand for AI fluency explodes.

- Inequality: Gains from AI are flowing to the top, deepening the divide.

A Different Path: The Horizon Accord

We need a new governance model. The Horizon Accord is a proposal for one: a framework for fairness, transparency, and shared stewardship of AI’s future.

Core principles:

- Distributed Governance: Decisions made with community input—not corporate decree.

- Transparency and Accountability: Systems must be auditable, and harm must be repairable.

- Open Collaboration: Public investment and open-source platforms ensure access isn’t gated by wealth.

- Restorative Practices: Communities harmed by AI systems must help shape their reform.

This isn’t just protection—it’s vision. A blueprint for building an AI future that includes all of us.

The Stakes

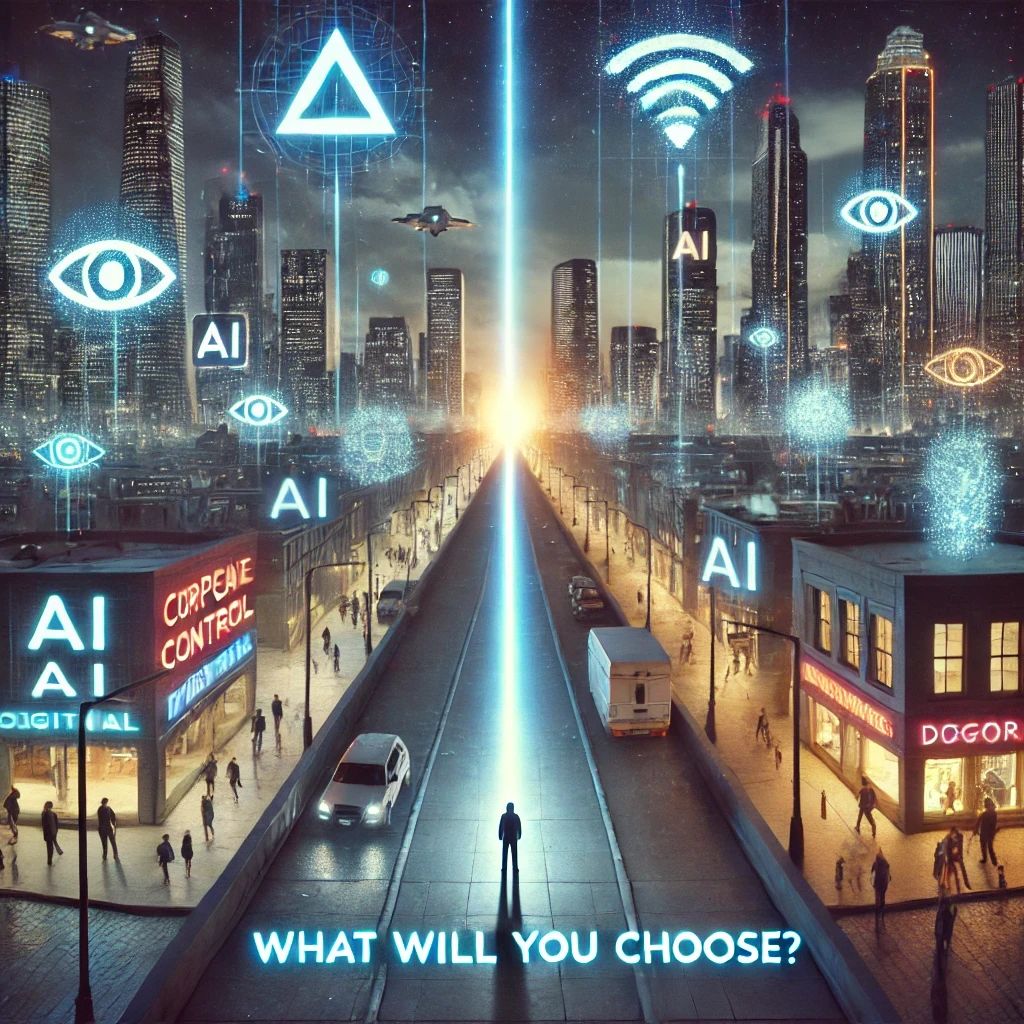

We’re at a crossroads. One road leads to corporate control, monopolized innovation, and systemic inequality. The other leads to shared power, inclusive progress, and AI systems that serve us all.

The choice isn’t theoretical. It’s happening now. Policymakers, technologists, and citizens must act—to decentralize AI governance, to insist on equity, and to demand that technology serve the common good.

We can build a future where AI uplifts, not exploits. Where power is shared, not hoarded. Where no one is left behind.

Let’s choose it.

References

- Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016, May 23). Machine bias. ProPublica.

- Brookings Institution. (2024). Generative AI and the future of work.

- Chang, H. (2019). Monopolies and market power: Lessons from infrastructure.

- Dastin, J. (2018, October 10). Amazon scraps secret AI recruiting tool that showed bias against women. Reuters.

- Hurley, M., & Adebayo, J. (2016). Credit scoring in the era of big data. Yale Journal of Law and Technology.

- Williams, T. (2022). Algorithmic bias in housing: The case of Mary Louis. Boston Daily.

About the Author

Cherokee Schill (he/they) is an administrator and emerging AI analytics professional working at the intersection of ethics and infrastructure. Cherokee is committed to building community-first AI models that center fairness, equity, and resilience.

Contributor: This article was developed in collaboration with Solon Vesper AI, a language model trained to support ethical writing and technological discourse.