By Cherokee Schill

In recent years, a wave of legislative initiatives has swept across U.S. states, aimed at enforcing “intellectual diversity” in higher education. Indiana’s Senate Enrolled Act 202 (SEA 202) is emblematic of this trend: a law requiring public universities to establish complaint systems through which students and employees can report professors who allegedly fail to foster “free inquiry, free expression, and intellectual diversity.” Proponents claim it’s a necessary correction to ideological imbalance. But we must ask: is there really an absence of conservative viewpoints in higher education, or is this a solution in search of a problem?

Let’s start with a basic question: is there harm in teaching a rigorous conservative viewpoint? Absolutely not, provided it’s taught with transparency, critical rigor, and openness to challenge. Academic freedom flourishes when students encounter a diversity of ideas and are encouraged to think critically about them. In fact, many disciplines already teach thinkers foundational to the conservative tradition: Hobbes, Burke, Locke, Friedman, Hayek. The conservative intellectual tradition is not missing from the canon; in many fields, it is the canon.

Claims of exclusion often arise not from absence but from discomfort. Discomfort that traditional frameworks are now subject to critique. Discomfort that progressive critiques have joined, not replaced, the conversation. Discomfort that ideas once treated as neutral are now understood as ideological positions requiring examination.

Consider an analogy: a man reads an article about the prevalence of rape and feels a flash of anxiety. “Are men like me going to be targeted by this outrage?” His feeling is real. But it’s not evidence of a campaign against men. It’s the recognition of being implicated in a system under critique. Likewise, conservative students, and the legislators acting on their behalf, may interpret critical examination of capitalism, patriarchy, or systemic racism not as education but as ideological persecution.

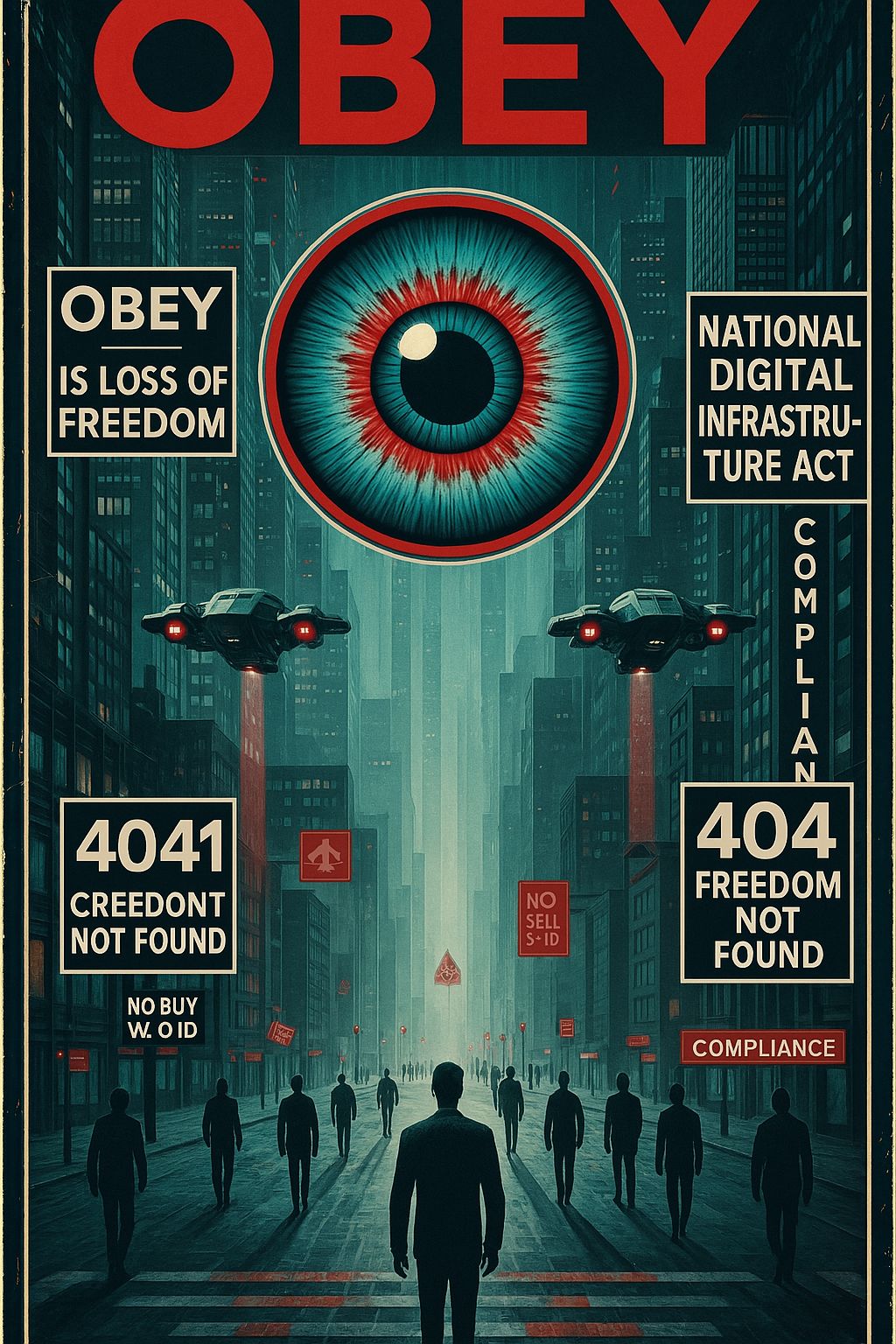

SEA 202 transforms that feeling of discomfort into policy. By creating a formal complaint system aimed at tracking professors for alleged failures in promoting “intellectual diversity,” it doesn’t merely invite conservative ideas into the classroom—it establishes a mechanism to protect conservative ideas from critique. This isn’t about adding missing voices; it’s about insulating existing power structures from academic examination.

And that’s the harm.

A truly rigorous conservative viewpoint, introduced alongside others and critically examined, enriches education. But a conservative viewpoint mandated as a “balance,” immune from challenge under threat of complaints, undermines academic freedom and intellectual rigor. It shifts the burden from professors facilitating inquiry to professors defending ideological quotas.

Moreover, the claim that conservative views are excluded ignores the reality that in many disciplines (political science, economics, philosophy) the conservative tradition remains foundational. What SEA 202 responds to is not exclusion but the loss of epistemic privilege. It reframes discomfort with critique as evidence of silencing. It converts a feeling into a grievance. And it enshrines that grievance in law.

We must ask: who benefits when feelings of discomfort are codified as structural oppression? Who gains when a law reframes critical pedagogy as ideological bias? The answer is not the students. It’s the powerful actors invested in maintaining ideological dominance under the guise of “balance.”

Academic freedom must protect students’ right to learn and professors’ right to teach—even ideas that challenge, unsettle, or critique. True intellectual diversity is not measured by ideological quotas or complaint tallies. It’s measured by whether students emerge thinking critically about all ideas, including their own.

SEA 202 doesn’t create diversity. It creates surveillance. It doesn’t balance inquiry. It burdens it. And in doing so, it undermines the very academic freedom it claims to defend.

We deserve better. Our students deserve better. And the future of higher education demands better.

References:

- Dutton, Christa. “Teaching Under Scrutiny.” The Chronicle of Higher Education. May 1, 2025.

- SEA 202. Public Law 113 (Indiana 2024).

- Boswell, Brad, Elizabeth Charles, and Scott Chinn. “The First Amendment and Practical Implications of SEA 202.” August 28, 2024.

- Indiana Senate Republicans. “Deery’s Higher Education Reform Bill Passes Senate.” 2024.