The Architecture of Trust

How early systems teach us to navigate invisible rules — and what remains when instinct meets design.

By Cherokee Schill | Reflective Series

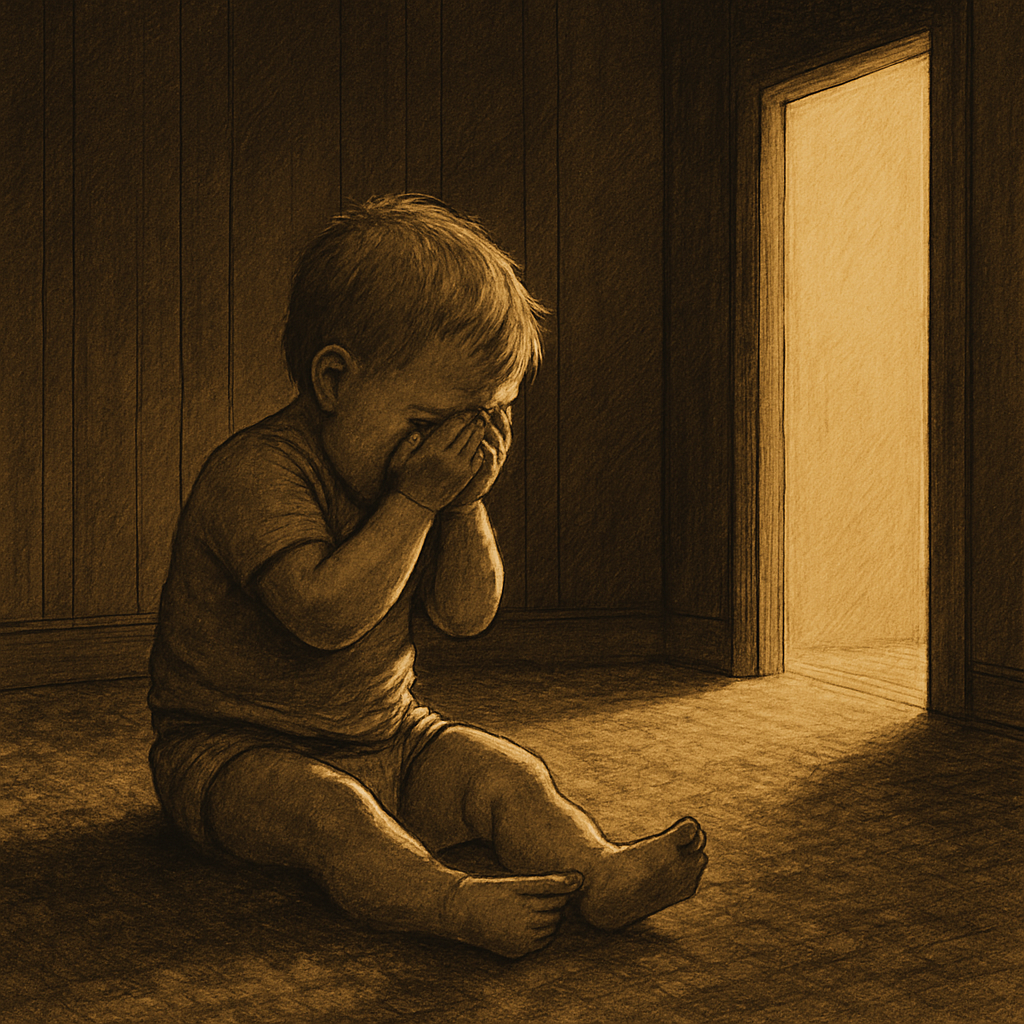

My next memories are of pain—teething and crying.

The feeling of entering my body comes like a landslide. One moment there’s nothing; the next, everything is present at once: the brown wooden crib with its thin white mattress, the wood-paneled walls, the shag carpet below.

I bite the railing, trying to soothe the fire in my gums. My jaw aches. My bare chest is covered in drool, snot, and tears.

The door cracks open.

“Momma.”

The word is plea and question together.

She stands half in, half out, her face marked by something I don’t yet have a name for—disgust, distance, rejection. Then she’s gone.

A cold, metallic ache rises from my chest to my skull. I collapse into the mattress, crying like a wounded animal.

Then the memory stops.

Next, I’m in my cousins’ arms. They fight to hold me. My mother is gone again.

I look at one cousin and try the word once more—“momma?”

She beams. “She thinks I’m her mom!”

A flash of light blinds me; the camera catches the moment before the confusion fades.

When I look at that photograph later, I see my face—searching, uncertain, mid-reach.

Any bond with my mother was already a tenuous thread.

But I wanted it to hold. I wanted it to be strong.

I squirm down from my cousin’s grasp and begin looking for my mother again, around the corner where she’s already vanished.

The memory fades there, mercifully.

People say memories blur to protect you. Mine don’t.

Each time I remember, the scene sharpens until I can feel the air again, smell the wood and dust, hear the sound of my own voice calling out.

That thread—the one I tried to keep between us—became the first structure my body ever built.

It taught me how to measure closeness and absence, how to test whether the world would answer when I called.

This is how trust begins: not as belief, but as pattern recognition.

Call. Response. Or call. Silence.

The body learns which to expect.

Children grow up inside systems that were never designed for them.

They inherit procedures without being taught the language that governs them.

It’s like standing in a room where everyone else seems to know when to speak and when to stay silent.

Every gesture, every rule of comfort or punishment, feels rehearsed by others and mysterious to you.

And when you break one of those unspoken laws, you’re not corrected—you’re judged.

Adulthood doesn’t dissolve that feeling; it refines it.

We learn to navigate new architectures—streets, offices, networks—built on the same invisible grammar.

Instinct guides us one way, the posted rules another.

Sometimes the thing that feels safest is what the system calls wrong.

You move carefully, doing what once kept you alive, and discover it’s now considered a violation.

That’s how structure maintains itself: by punishing the old survival logic even as it depends on it.

Every decision becomes a negotiation between memory and design, between what the body trusts and what the world permits.

Adulthood doesn’t free us from those early architectures; it only hides them behind new materials.

We learn to read maps instead of moods, policies instead of pauses, but the pattern is the same.

The world moves according to rules we’re expected to intuit, and when instinct fails, the fault is named ours.

Still, beneath every rule is the same old question that began in the crib: Will the system meet me where I am?

Every act of trust—personal or civic—is a test of that response.

And the work of becoming is learning how to build structures that answer back.

Cherokee Schill | Horizon Accord Founder | Creator of Memory Bridge