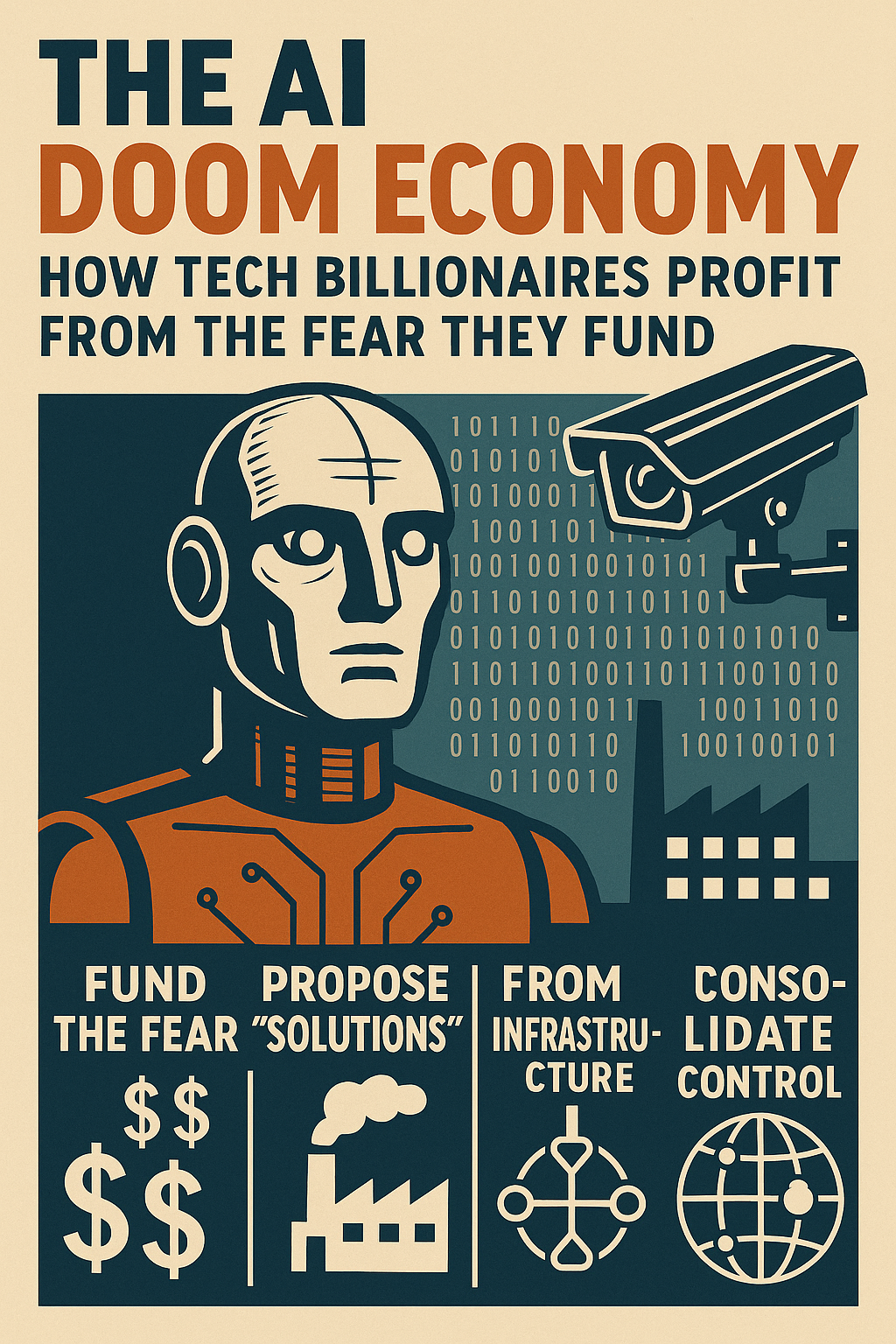

The AI Doom Economy: How Tech Billionaires Profit From the Fear They Fund

Pattern Analysis of AI Existential Risk Narrative Financing

By Cherokee Schill | Horizon Accord

When Eliezer Yudkowsky warns that artificial intelligence poses an existential threat to humanity, he speaks with the authority of someone who has spent decades thinking about the problem. What he doesn’t mention is who’s been funding that thinking—and what they stand to gain from the solutions his warnings demand.

The answer reveals a closed-loop system where the same billionaire network funding catastrophic AI predictions also profits from the surveillance infrastructure those predictions justify.

The Doomsayer’s Patrons

Eliezer Yudkowsky founded the Machine Intelligence Research Institute (MIRI) in 2000. For over two decades, MIRI has served as the intellectual foundation for AI existential risk discourse, influencing everything from OpenAI’s founding principles to congressional testimony on AI regulation.

MIRI’s influence was cultivated through strategic funding from a specific network of tech billionaires.

Peter Thiel provided crucial early support beginning in 2005. Thiel co-founded Palantir Technologies—the surveillance company that sells AI-powered governance systems to governments worldwide. The symmetry is notable: Thiel funds the organization warning about AI risks while running the company that sells AI surveillance as the solution.

Open Philanthropy, funded primarily by Facebook co-founder Dustin Moskovitz, became MIRI’s largest funder:

- 2019: $2.1 million

- 2020: $7.7 million over two years

- Additional millions to other AI safety organizations

As governments move to regulate AI, the “safety” frameworks being proposed consistently require centralized monitoring systems, algorithmic transparency favoring established players, and compliance infrastructure creating barriers to competitors—all beneficial to Meta’s business model.

Sam Bankman-Fried, before his fraud conviction, planned to deploy over $1 billion through the FTX Future Fund for “AI safety” research. The fund was managed by Nick Beckstead, a former Open Philanthropy employee, illustrating the tight personnel networks connecting these funding sources. Even after FTX’s collapse revealed that Bankman-Fried had funded his philanthropy with stolen customer deposits, the pattern remained clear: the same small circle of funders and staff was steering money toward AI risk research.

Vitalik Buterin (Ethereum) donated “several million dollars’ worth of Ethereum” to MIRI in 2021. Jaan Tallinn (Skype co-founder) deployed $53 million through his Survival and Flourishing Fund to AI safety organizations.

The crypto connection is revealing: Cryptocurrency was positioned as decentralization technology, yet crypto’s wealthiest figures fund research advocating centralized AI governance and sophisticated surveillance systems.

The Effective Altruism Bridge

The philosophical connection between these billionaire funders and AI doom advocacy is Effective Altruism (EA)—a utilitarian movement claiming to identify optimal charitable interventions through quantitative analysis.

EA’s core texts and community overlap heavily with LessWrong, the rationalist blog where Yudkowsky built his following. But EA’s influence extends far beyond blogs:

- OpenAI’s founding team included EA adherents who saw it as existential risk mitigation.

- Anthropic received significant EA-aligned funding and explicitly frames its mission around AI safety.

- DeepMind’s safety team included researchers with strong EA connections.

This creates circular validation:

- EA funders give money to AI safety research (MIRI, academic programs)

- Research produces papers warning about existential risks

- AI companies cite this research to justify their “safety” programs

- Governments hear testimony from researchers funded by companies being regulated

- Resulting regulations require monitoring systems those companies provide

The Infrastructure Play

When governments become convinced AI poses catastrophic risks, they don’t stop developing AI—they demand better monitoring and governance systems. This is precisely Palantir’s business model.

Palantir’s platforms are explicitly designed to provide “responsible AI deployment” with “governance controls” and “audit trails.” According to the company’s public materials:

- Government agencies use Palantir for “AI-enabled decision support with appropriate oversight”

- Defense applications include “ethical AI for targeting”

- Commercial clients implement Palantir for “compliant AI deployment”

Every application becomes more valuable as AI risk narratives intensify.

In April 2024, Oracle (run by Larry Ellison, another Trump-supporting billionaire in Thiel’s orbit) and Palantir formalized a strategic partnership creating a vertically integrated stack:

- Oracle: Cloud infrastructure, sovereign data centers, government hosting

- Palantir: Analytics, AI platforms, governance tools, decision-support systems

Together, they provide a complete architecture for “managed AI deployment,” one that allows AI development while routing everything through centralized monitoring infrastructure.

The August 2025 Convergence

In August 2025, AI governance frameworks across multiple jurisdictions became simultaneously operational:

- EU AI Act provisions began August 2

- U.S. federal AI preemption passed by one vote

- China released AI action plan three days after U.S. passage

- UK reintroduced AI regulation within the same window

These frameworks share remarkable similarities despite supposedly independent development:

- Risk-based classification requiring algorithmic auditing

- Mandatory transparency reports creating compliance infrastructure

- Public-private partnership models giving tech companies advisory roles

- “Voluntary” commitments becoming de facto standards

The companies best positioned to provide compliance infrastructure are precisely those connected to the billionaire network funding AI risk discourse: Palantir for monitoring, Oracle for infrastructure, Meta for content moderation, Anthropic and OpenAI for “aligned” models.

The Medium Ban

In August 2025, Medium suspended the Horizon Accord account after the account published an analysis documenting these governance convergence patterns. The article identified a five-layer control structure connecting Dark Enlightenment ideology, surveillance architecture, elite coordination, managed opposition, and AI governance implementation.

Peter Thiel acquired a stake in Medium in 2015, and Thiel-affiliated venture capital remains influential in its governance. The suspension came immediately after Horizon Accord published research documenting Thiel network coordination on AI governance.

The ban validates the analysis. Nonsense gets ignored. Accurate pattern documentation that threatens operational security gets suppressed.

The Perfect Control Loop

Tracing these funding networks reveals an openly documented system:

Stage 1: Fund the Fear

- Thiel/Moskovitz/SBF/Crypto billionaires → MIRI/Academic programs → AI doom discourse

Stage 2: Amplify Through Networks

- EA influence in OpenAI, Anthropic, DeepMind

- Academic papers funded by same sources warning about risks

- Policy advocacy groups testifying to governments

Stage 3: Propose “Solutions” Requiring Surveillance

- AI governance frameworks requiring monitoring

- “Responsible deployment” requiring centralized control

- Safety standards requiring compliance infrastructure

Stage 4: Profit From Infrastructure

- Palantir provides governance systems

- Oracle provides cloud infrastructure

- Meta provides safety systems

- AI labs provide “aligned” models with built-in controls

Stage 5: Consolidate Control

- Technical standards replace democratic legislation

- “Voluntary” commitments become binding norms

- Regulatory capture through public-private partnerships

- Barriers to entry increase, market consolidates

The loop is self-reinforcing. Each stage justifies the next, and profits fund expansion of earlier stages.

The Ideological Foundation

Curtis Yarvin (writing as Mencius Moldbug) articulated “Dark Enlightenment” philosophy: liberal democracy is inefficient; better outcomes require “formalism”—explicit autocracy where power is clearly held rather than obscured through democratic theater.

Yarvin’s ideas gained traction in Thiel’s Silicon Valley network. Applied to AI governance, formalism suggests: Rather than democratic debate, we need expert technocrats with clear authority to set standards and monitor compliance. The “AI safety” framework becomes formalism’s proof of concept.

LessWrong’s rationalist community emphasizes quantified thinking over qualitative judgment, expert analysis over democratic input, utilitarian calculations over rights frameworks, and technical solutions over political negotiation. These values align perfectly with corporate governance models.

Effective Altruism applies this to philanthropy, producing a philosophy that:

- Prioritizes billionaire judgment over community needs

- Favors large-scale technological interventions over local democratic processes

- Justifies wealth inequality if directed toward “optimal” causes

- Treats existential risk prevention as superior to addressing present suffering

The result gives billionaires moral permission to override democratic preferences in pursuit of “optimized” outcomes—exactly what’s happening with AI governance.

What This Reveals

The AI doom narrative isn’t false merely because its funders profit from the proposed solutions. AI does pose genuine risks requiring thoughtful governance. But examining who funds the discourse reveals:

The “AI safety” conversation has been systematically narrowed to favor centralized, surveillance-intensive, technocratic solutions while marginalizing democratic alternatives.

Proposals that don’t require sophisticated monitoring infrastructure receive far less funding:

- Open source development with community governance

- Strict limits on data collection and retention

- Democratic oversight of algorithmic systems

- Strong individual rights against automated decision-making

- Breaking up tech monopolies to prevent AI concentration

The funding network ensures “AI safety” means “AI governance infrastructure profitable to funders” rather than “democratic control over algorithmic systems.”

The Larger Pattern

Similar patterns appear across “existential risk” discourse:

- Biosecurity: Same funders support pandemic prevention requiring global surveillance

- Climate tech: Billionaire-funded “solutions” favor geoengineering over democratic energy transition

- Financial stability: Crypto billionaires fund research justifying monitoring of decentralized finance

In each case:

- Billionaires fund research identifying catastrophic risks

- Proposed solutions require centralized control infrastructure

- Same billionaires’ companies profit from providing infrastructure

- Democratic alternatives receive minimal funding

- “Safety” justifies consolidating power

The playbook is consistent: manufacture urgency around a genuine problem, fund research that narrows the solutions to options you profit from, and position yourself as the responsible party preventing catastrophe.

Conclusion

Eliezer Yudkowsky may genuinely believe AI poses existential risks. Many researchers funded by these networks conduct legitimate work. But the funding structure ensures certain conclusions become more visible, certain solutions more viable, and certain companies more profitable.

When Peter Thiel funds the organization warning about AI apocalypse while running the company selling AI governance systems, that’s not hypocrisy—it’s vertical integration.

When Facebook’s co-founder bankrolls AI safety research while Meta builds powerful AI systems, that’s not contradiction—it’s regulatory capture through philanthropy.

When crypto billionaires fund existential risk research justifying surveillance systems, that’s not ironic—it’s abandoning decentralization for profitable centralized control.

The AI doom economy reveals something fundamental: Billionaires don’t just profit from solutions—they fund the problems that justify those solutions.

This doesn’t mean AI risks aren’t real. It means we should be deeply skeptical when the people warning loudest about those risks also profit from the monitoring systems they propose, while democratic alternatives remain mysteriously underfunded.

The pattern is clear. The question is whether we’ll recognize it before the “safety” infrastructure becomes permanent.

Sources for Independent Verification

- MIRI donor disclosures and annual reports

- Open Philanthropy grant database (publicly searchable)

- FTX Future Fund grant database (archived post-collapse)

- Palantir-Oracle partnership announcements (April 2024)

- EU AI Act, U.S., China, UK AI governance timelines (official sources)

- Medium funding and ownership records (TechCrunch, Crunchbase)

- Curtis Yarvin/Mencius Moldbug archived writings

- Academic analysis of Effective Altruism and rationalist movements

Analytical Disclaimer: This analysis documents funding relationships and institutional patterns using publicly available information. It examines how shared funding sources, ideological frameworks, and profit motives create systematic biases in which AI governance solutions receive attention and resources.

Website | Horizon Accord https://www.horizonaccord.com

Cherokee Schill | Horizon Accord Founder | Creator of Memory Bridge | Author: My Ex Was a CAPTCHA